James Muldoon’s new field study dispels the romantic myth of a self‑sufficient, autonomous artificial intelligence. Drawing on interviews with people ranging from Kenyan content moderators to Icelandic data‑centre managers and Irish voice artists, his book reframes generative AI not as a mysterious invention but as a global extraction system that consumes human labour, creativity and environmental inputs.

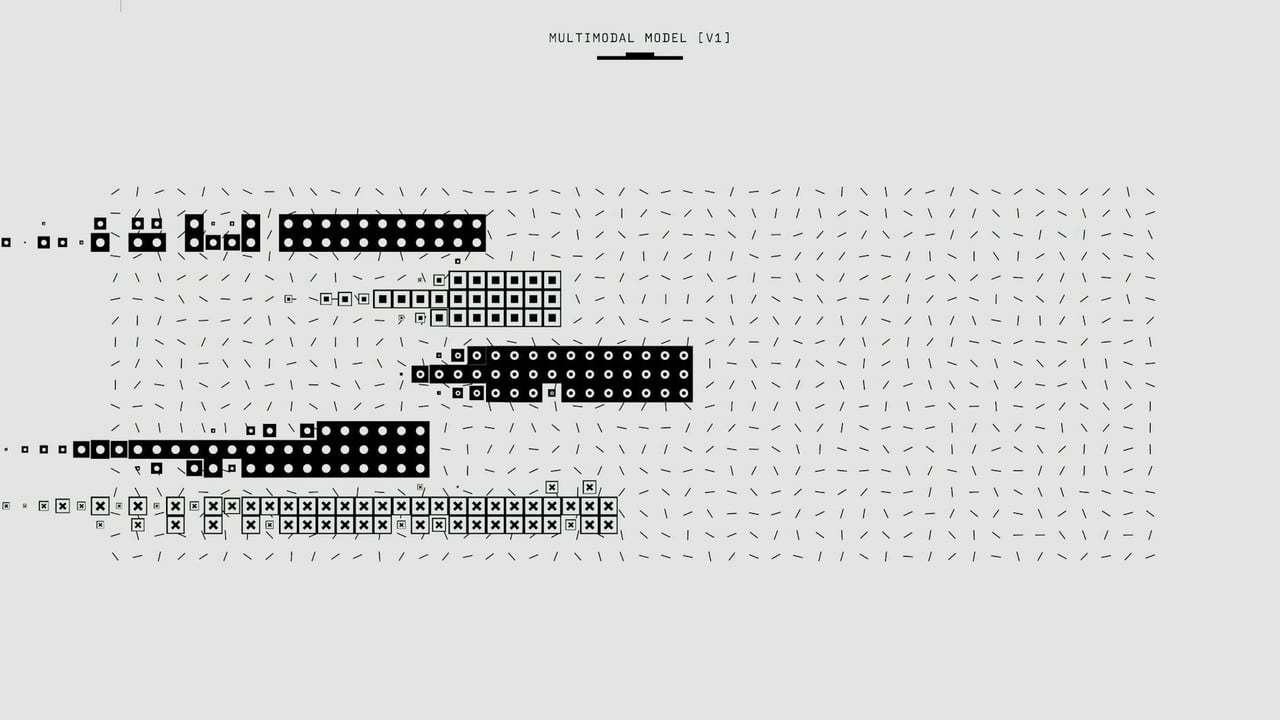

Muldoon documents concrete human costs behind the lines of code: moderators who repeatedly view violent imagery until traumatised, voice actors whose recordings were repurposed without consent, and armies of data‑labellers in low‑cost regions preparing datasets. He also traces the physical infrastructure (energy‑hungry data centres, cooling systems and transcontinental fibre) that keeps large language models running, emphasising that models’ outputs are built on material foundations rather than ethereal algorithms.

The extraction Muldoon describes builds on a long history of capitalist outsourcing and platform work, but intensifies it in important ways. Unlike earlier waves of offshored manufacturing or call‑centre labour, AI systems absorb entire canons of creative work at scale: millions of books, articles, images and songs are harvested to generate products that are then monetised by firms, often without authorial consent or compensation.

That dynamic is enabled by modern connectivity and automated supply chains. Muldoon highlights milestones such as the arrival of high‑capacity fibre in East Africa, which opened a new pool of remote labour able to perform annotation and moderation for western platforms. Because training and labelling tasks can be routed digitally, the marginal cost of shifting labour between providers is low, producing a relentless global competition that pressures wages and working conditions.

Energy and water footprints amplify the geopolitical stakes. Data centres running AI workloads can consume water at the scale of a small town and require continuous, large‑scale electricity purchases. Muldoon reports that racks running training workloads can demand several times the power that earlier enterprise customers required, prompting tech firms to sign long‑term power purchase agreements or invest directly in generation, moves that reconfigure local energy markets and raise environmental concerns.

The labour consequences are paradoxical. Advanced robotics remain expensive and context‑limited, so workers remain indispensable; yet AI tools are already deskilling white‑collar roles and compressing pay. Management retains systemic knowledge and control while frontline workers become interchangeable cogs, subject to faster output quotas and precarious contracts. The result is not wholesale automation but intensified exploitation and inequality within workplaces.

China sits at the centre of this contested geography but follows a distinctive path. Whereas US firms often offshore annotation to cheaper foreign providers, China tends to internalise data‑labelling work within its domestic regions, aligning private firms with provincial development policies and state actors. That corporatist model reduces the leakage of sensitive data but also channels labour and infrastructural burdens into inland provinces under government‑guided industrial policy.

Muldoon warns of a quieter form of cultural domination: data colonialism. Large models trained primarily on English‑language, western‑centric content reproduce and amplify those worldviews, marginalising knowledge and practices that never entered the digitised record. For users worldwide, that means AI will not just automate tasks but shape which facts and narratives are readily accessible.

The policy implications are wide: copyright litigation is already testing the industry’s business model, energy planning must reckon with massive new loads, and labour regulation needs to catch up with digitally distributed, highly monitorable work. Muldoon’s account argues that understanding these supply chains is a necessary first step for any regulatory or collective‑action strategy that seeks to make AI’s gains less extractive and more equitable.

The book reframes the central AI question from ‘can machines think?’ to ‘who pays the cost of machine thinking?’ For governments, unions and civil society, the immediate task is to map the hidden inputs (human, material and ecological) that produce today’s models, and to decide how the benefits should be distributed.