OpenAI has begun work on a social network that aims to eliminate the plague of automated accounts that have long distorted online discourse. The project is at an early stage and is being developed by a team of fewer than ten people. It is explicitly intended to extend the reach of ChatGPT and OpenAI’s video generator Sora while offering a platform for strictly human users.

The impetus is familiar: major platforms such as X (formerly Twitter) have struggled for years with vast armies of bots that promote cryptocurrency scams, amplify polarising content and undermine trust in what users see. After Elon Musk’s takeover and the rebranding to X, the company stepped up removals, wiping about 1.7 million bot accounts in 2025 alone, but the problem has proved stubborn and recurrent.

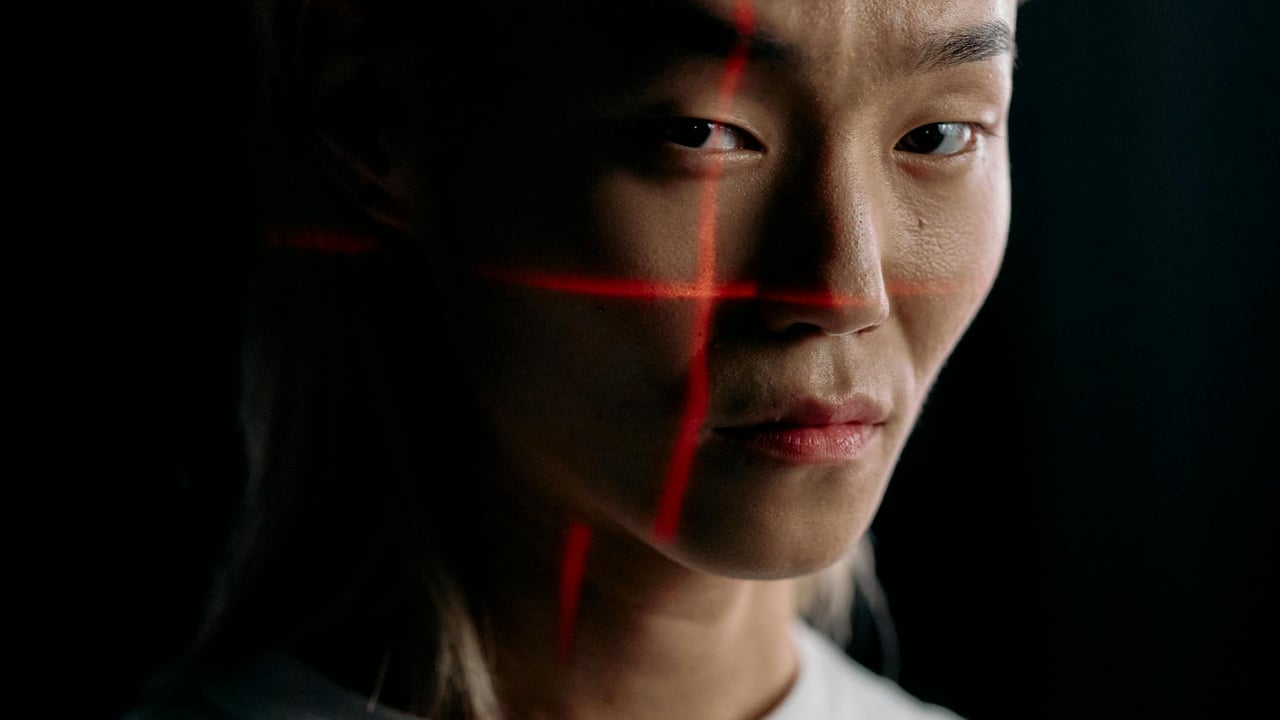

OpenAI’s designers are exploring a novel enforcement tool: biometric proofs of personhood. The team has discussed using Apple’s Face ID or an iris‑scanning device called World Orb to verify that each account is tied to a living human rather than software. World Orb is operated by Tools for Humanity, a company founded by OpenAI CEO Sam Altman, who also serves as its chairman.

Biometric checking, especially if tied to hardware such as Face ID or iris scanners, promises a far stronger guarantee of “realness” than the phone‑number or email checks that Facebook and LinkedIn have long used. It would make large‑scale automated impersonation far harder and could materially raise the cost of running influence operations and scam networks.

But the approach carries sizable privacy and security trade‑offs. Privacy advocates warn that immutable biometric identifiers such as an iris present a single point of catastrophic failure: if scans are stolen, they cannot be reissued like a password. The centralisation of such sensitive data would attract regulators, litigation and adversaries seeking to weaponise identification information.

If OpenAI pushes forward, it will confront entrenched incumbents (X, Instagram and TikTok) as well as a sceptical public. The move would also force a broader conversation about whether friction and stronger identity checks are acceptable in exchange for fewer bots and a higher signal‑to‑noise ratio online. Technical success does not guarantee adoption: users, platform partners and regulators will judge any biometric model on criteria far beyond bot reduction.

The project is a statement of intent. It signals that leading AI developers see the integrity deficits in existing social media as both a market opportunity and a reputational risk. How OpenAI balances user safety, growth ambitions and the ethical limits of biometric surveillance will test assumptions about what a trustworthy internet can and should look like.