# TPU

Latest news and articles about TPU

Total: 3 articles found

Google Doubles Down on AI Compute: $175–185bn CapEx, Gemini Adoption, and a Cloud Surge

Alphabet reported strong 2025 results and announced a sharp increase in 2026 capital expenditure, to $175–185 billion, to scale AI compute and data‑centre capacity. Gemini 3 adoption, a 48% jump in cloud revenue, and falling model-serving costs underpin management’s argument that the spending is necessary to meet surging AI demand.

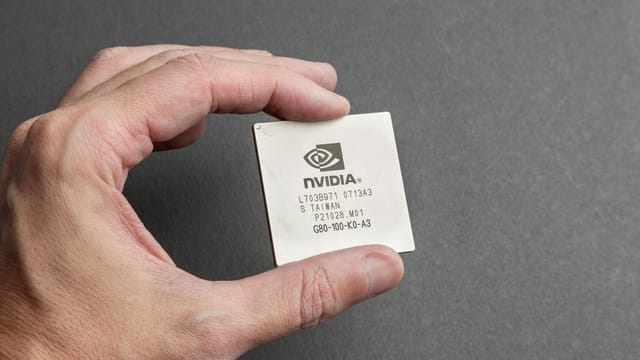

Microsoft’s Maia 200 Raises the Stakes in the Cloud AI Chip War

Microsoft has started deploying its Maia 200 AI accelerator, built on TSMC’s 3nm process, claiming substantial performance and cost advantages over Amazon’s Trainium and Google’s TPU. The chip, designed to run large models efficiently at low power, is part of Microsoft’s strategy to secure more predictable, cheaper AI compute for Azure and to lessen its reliance on Nvidia. An SDK preview is available to developers; Microsoft has promised broader cloud rental availability later but has not given a date.

Microsoft Unveils Maia 200 — A 3nm AI Inference Chip Aimed at Denting NVIDIA’s Dominance

Microsoft has launched Maia 200, an AI inference chip built on TSMC’s 3nm process that the company says outperforms Amazon’s Trainium v3 and Google’s TPU v7 on low-precision workloads while improving inference cost-efficiency by about 30% versus its current fleet. The release underscores hyperscalers’ push into custom silicon to reduce reliance on Nvidia GPUs, but its success will depend on software tooling, ecosystem adoption, and independent benchmarking.