Xiaomi’s robotics group has released TacRefineNet, a tactile-driven pose‑refinement framework that can correct imprecise grasps to millimetre-level accuracy without relying on cameras or pre-existing 3D models of objects. The team demonstrated the method in both simulation and physical trials, including handling parts typical of automotive assembly. It published technical details and experimental footage and said that additional work will follow.

TacRefineNet uses only touch feedback to iteratively adjust a robot’s end‑effector pose after an initial, imperfect grasp. Starting from a diverse set of misaligned grasps, the model brings average position error down to millimetre levels within a few refinement steps, the team reports. That performance held up both in simulation and on real hardware, which suggests the approach can tolerate the kinds of variability and sensor noise found on factory floors.
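
To make the idea of iterative, touch-only refinement concrete, here is a minimal toy sketch in Python. It is not Xiaomi's implementation: the function names, the gain, the noise level and the 1 mm tolerance are all assumptions chosen for illustration, and the learned tactile model is replaced by a simple noisy estimator. It models position error only and ignores orientation.

```python
import random


def predict_correction(pose_error_mm, rng, gain=0.7, noise_mm=0.2):
    """Hypothetical stand-in for a learned tactile model.

    A real system would infer the correction from tactile readings; this toy
    version reads the simulated error directly and returns a partial, noisy
    correction for each axis.
    """
    return [-gain * e + rng.uniform(-noise_mm, noise_mm) for e in pose_error_mm]


def refine_grasp(initial_error_mm, max_steps=10, tol_mm=1.0, seed=0):
    """Apply corrections until the largest per-axis error is within tol_mm."""
    rng = random.Random(seed)
    error = list(initial_error_mm)
    history = [max(abs(e) for e in error)]  # worst-axis error before any step
    for _ in range(max_steps):
        if history[-1] <= tol_mm:
            break  # grasp is already within tolerance
        delta = predict_correction(error, rng)
        # Moving the end effector by delta changes the residual error.
        error = [e + d for e, d in zip(error, delta)]
        history.append(max(abs(e) for e in error))
    return error, history


if __name__ == "__main__":
    final_error, history = refine_grasp([8.0, -5.0, 3.0])
    print("worst-axis error per step (mm):", [round(h, 2) for h in history])
```

In this sketch each step removes most of the remaining error, so a grasp that starts several millimetres off settles within the tolerance after a couple of corrections. How quickly a real tactile model converges depends on the sensor, the object and the training data.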

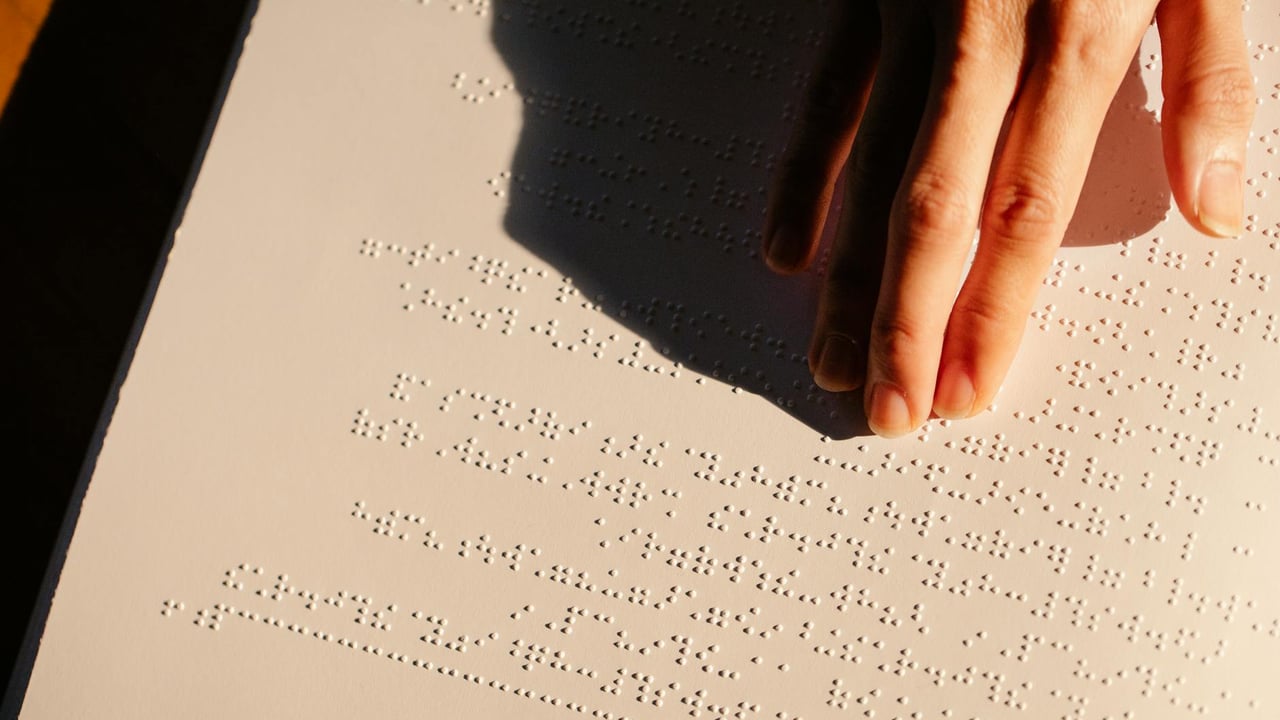

The shift toward tactile-only refinement addresses a longstanding limitation of vision‑centred manipulation. Cameras and depth sensors struggle with occlusion, reflective surfaces and cramped workspaces, all of which are common in industrial settings. By contrast, tactile sensing activates only at contact and delivers high‑resolution local information about relative position and orientation, allowing a robot to ‘feel’ its way to a secure grasp even when visual cues are absent or misleading.

TacRefineNet sits amid a broader revival of interest in embodied intelligence: research that emphasises physical interaction, proprioception and closed‑loop control. Previous progress in robotic grasping has often depended on large labelled datasets, accurate object CAD models or multi‑sensor fusion. Xiaomi’s framework sidesteps those requirements for the refinement stage, which could lower the integration cost for retrofitting robots to new tasks where collecting 3D models is impractical.

Publication of technical details and videos matters. Sharing methods publicly accelerates academic replication, invites third‑party validation and can hasten industrial adoption if integrators can adapt the software to existing hardware. Nevertheless, moving from laboratory demonstrations to high‑throughput production lines will require solving engineering challenges: tactile sensors must be rugged, contact‑based corrections can be slower than purely visual adjustments, and models must generalise across a wider range of object geometries and materials than a research paper can typically cover.

For manufacturers and robotics suppliers, TacRefineNet offers a tempting proposition: more reliable pick‑and‑place in occluded or cluttered environments without expensive vision setups or time‑consuming object modelling. For Xiaomi, the work illustrates a strategic push beyond phones and consumer devices into robotics and embodied AI, one that could pay dividends if the company couples algorithmic advances with scalable tactile hardware and seamless integration into industrial toolchains.